This month T-SQL Tuesday is hosted by Mickey Steuwe (blog/@SQLMickey)

and her topic of choice is “Data

Modeling Gone Wrong.”

(If you don’t know about T-SQL Tuesday check out the

information here

– each month there is a new topic and it is a great excuse to write each month (and to start writing!) because someone offers a topic, so you already have taken the first step!).

My first memory of a data modeling problem comes to data

types and the process of choosing appropriate data types - not because of storage concerns (although there can be problems) but because of actual performance risks.

When the data type of a field in a table doesn’t precisely

match the datatype of your query (or parameter of your stored procedure) a

conversion occurs. Conversions can be

implicit (automatic) or explicit (requiring an inline CONVERT or CAST statement

in the query).

Here is a chart from the Technet article

on Data Type Conversions:

As you can see, a conversion from a CHAR to an NVARCHAR is “implicit”

– so no big deal, right?

WRONG!

When I started as a DBA, we designed a system for payment

processing for a university debit card system (an internal system, not a

VISA/MC branded card at that time). Most

of us that were involved were relatively new to the design process, so we

decided to use NVARCHAR for all of our character fields. We decided it would be useful in a couple of

ways:

- It was a standard – everything was the same (my 16-year-experienced self now knows that isn’t a great definition of “standard”)

- It allowed us to handle any eventual contingency – maybe we would need Unicode data, right?

At the beginning there wasn’t any visible impact – we only had

a few thousand accounts and didn’t notice any issues.

Then we got to Fall Rush…

If you are familiar with American Universities, you know that

campus bookstores make 80%-90% of their profit during the first week of each

semester and often the week before. (This

is probably true at many international universities as well.) One of the

primary uses of our internal card was textbook purchasing, so we were slammed

during this time period, both in terms of overall quantity of transactions and

rapidity of transactions during the business day.

When we hit this period, we saw performance dip. At first we

assumed it was just load (remember this was SQL Server 7.0 and then 2000) but

we quickly realized that there was slag in our queries that wasn’t needed – we saw

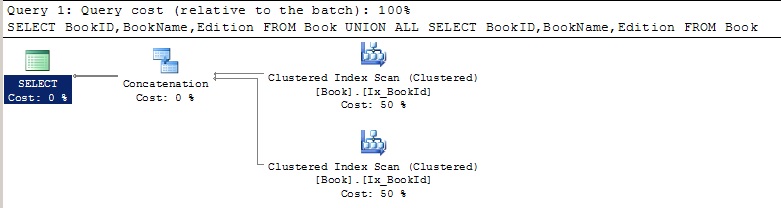

this in our query plan:

Why did we have a SCAN instead of a SEEK?

In a modern query plan this is visible in the predicate as a

CONVERT_IMPLICIT:

Even in our inexperienced state we knew one of those key

rules of query optimizations:

SCAN BAD! (In your best Frankenstein voice)

There are two ways to deal with an issue like this – you can

fix the design of the table, or you can modify your code to perform an explicit CAST or

CONVERT of the statement. The best fix (“best”)

is to fix the design of the table – Aaron Bertrand (blog/@AaronBertrand) has a good list of

suggestions

here for fields that can often be set to a smaller data type.

If you can’t change the table design (as you very often can’t)

the other option is to modify the code to prevent an implicit conversion. The first method is like this:

DECLARE @CardID as NVARCHAR(10)

SET @CardID = ‘123ABC456’

SELECT CardID, LastName, FirstName,

Balance

FROM Credit

WHERE CardID = @CardID

In this case the CardID field in the Credit table is of the

NVARCHAR data type (as in my debit card scenario above). To get rid of the implicit conversion I am

using a variable that is NVARCHAR to “pre-perform” the conversion so that by

the time the query runs it is comparing apples to apples (or in this case

NVARCHAR to NVARCHAR).

Another way to accomplish this is by using a CAST/CONVERT,

like this:

SELECT CardID, LastName, FirstName,

Balance

FROM Credit

WHERE CardID = CAST(‘value’ as

NVARCHAR)

--

This seems like a minor detail but it can be a very

important one, especially in systems with lots of rows and/or high transaction

rates.

--

Your homework - there is a great test harness for this

problem created by Jonathan Kehayias (blog/@sqlpoolboy) in a blog

post at SQLPerformance.com including charts that quantify the performance

hit and CPU costs. Check it out!

I think it would be easier to make the comparison a Unicode comparison by prefixing the string with an N, e. g., WHERE CardID = N'123ABC456'. Of course every nvarchar string comparison would need to have this prefixing to avoid implicit conversion. Maybe it is something to reserve for where it will actually be needed. Thanks for the post. I have a coworker that told me he defines everything nvarchar, just to be prepared. I think this will help change his mind.

ReplyDeleteI did a test on my own SQL Server 2012 system where the PK is 3 columns and I have almost 300K rows. I defined the key columns as nvarchar and then did retrievals using comparisons with and without implicit conversions on combinations of columns. In all cases an index seek was done, It was just a matter of whether it was doing the seek on the implicitly converted value or the unconverted values when they were flagged as Unicode. I expect there is something else going on, maybe with FK constraints. I know the problem of trying to simplify a complex system for publication.

ReplyDelete