|

| https://i.imgflip.com/jaq5c.jpg |

As you probably already know, the key flaw to percentage-based FILEGROWTH is that over time the increment grows larger and larger, causing the actual growth itself to take longer and longer. This is especially an issue with LOG files because they have to be zero-initialized before they can be used, causing excessive I/O and file contention while the growth is in progress. Paul Randal (blog/@PaulRandal) describes why this is the case in this blog post. (If you ever get a chance to see it Paul also does a fun demo in some of his classes and talks on why zero initialization is importan, using a hex editor to read the underlying contents of disk even after the old data is "deleted")

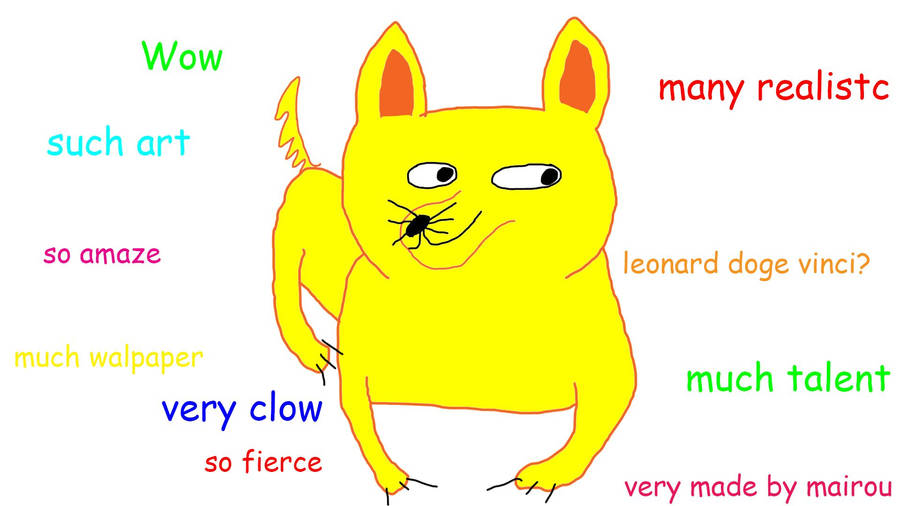

As I mentioned above the catch to percentage-based growth is the ever-growing increment:

In the image on the left you can see that after 20 growths at 10% you are now growing at 313MB at a time. By 30 growths (not pictured) the increment is 812MB - getting close to the 1GB mark. Depending on the speed of your storage this can cause significant delay.

Another related issue is Virtual Log Files (VLF's) which I discuss here. It is important to have a meaningful increment so that the growth isn't *too* small relative to the file size.

How do we fix this? I found a script (and modified it of course) to generate the ALTER DATABASE statements to set the FILEGROWTH increment so a size fair to the individual file's current size, based on a table shown in the script:

--

/*

FILEGROWTH Reset

Submitted by Andy Galbraith

02/04/2016

Script to reset all FILEGROWTH to a fixed increment based on their current size:

CurrentSize<1GB = 16MB

1GB<=CurrentSize<5GB = 128MB

5GB<=CurrentSize<100GB = 256MB

CurrentSize>=100GB = 512MB

Actual queries are about two-thirds of the way down at 'SET @Query' if you want to modify the size parameters

Modified from a script at http://www.sqlservercentral.com/scripts/Administration/99339/

Tested on MSSQL 2005/2008/2008R2/2012/2014

*/

SET NOCOUNT ON

USE master

GO

/* Create a Table for Database File Info */

IF OBJECT_ID('tempdb..#ConfigAutoGrowth') IS NOT NULL

DROP TABLE #ConfigAutoGrowth

CREATE TABLE #ConfigAutoGrowth

(

DatabaseID INT,

DBName SYSNAME,

LogicalFileName VARCHAR(max),

FileSizeinGB decimal(10,2),

GrowthOption VARCHAR(12)

)

/* Load the Database File Table */

INSERT INTO #ConfigAutoGrowth

SELECT

SD.database_id,

SD.name,

SF.name,

sf.size*8/1024.0/1024.0 as FileSizeinGB,

CASE SF.status & 0x100000

WHEN 1048576 THEN 'Percentage'

WHEN 0 THEN 'MB'

END AS 'GROWTH Option'

FROM SYS.SYSALTFILES SF

JOIN SYS.DATABASES SD

ON SD.database_id = SF.dbid

/* Variable and Cursor Declarations */

DECLARE @name VARCHAR ( max ) /* Database Name */

DECLARE @DatabaseID INT /* Database ID */

DECLARE @LogicalFileName VARCHAR ( max ) /* Database Logical file name */

DECLARE @FileSizeinGB DECIMAL(10,2) /* Current File Size in GB */

DECLARE @GrowthOption VARCHAR ( max ) /* Current FILEGROWTH Type */

DECLARE @Query VARCHAR(max) /* Dynamic Query */

DECLARE DBCursor CURSOR FOR

SELECT DatabaseID, DBName, LogicalFileName, FileSizeinGB, GrowthOption

FROM #ConfigAutoGrowth

OPEN DBCursor

FETCH NEXT FROM DBCursor

INTO @DatabaseID,@name,@LogicalFileName,@FileSizeinGB, @GrowthOption

WHILE @@FETCH_STATUS = 0

BEGIN

PRINT 'Changing AutoGrowth option for database ['+ UPPER(@name) +'] - current file size ' + cast (@FileSizeinGB as varchar)+'GB'

IF @FileSizeinGB<1

SET @Query= 'ALTER DATABASE ['+ @name +'] MODIFY FILE (NAME = ['+@LogicalFileName+'],FILEGROWTH = 16MB)'--,MAXSIZE=UNLIMITED)'

IF @FileSizeinGB>=1 and @FileSizeinGB<5

SET @Query= 'ALTER DATABASE ['+ @name +'] MODIFY FILE (NAME = ['+@LogicalFileName+'],FILEGROWTH = 128MB)'--,MAXSIZE=UNLIMITED)'

IF @FileSizeinGB>=5 and @FileSizeinGB <100

SET @Query= 'ALTER DATABASE ['+ @name +'] MODIFY FILE (NAME = ['+@LogicalFileName+'],FILEGROWTH = 256MB)'--,MAXSIZE=UNLIMITED)'

IF @FileSizeinGB>=100

SET @Query= 'ALTER DATABASE ['+ @name +'] MODIFY FILE (NAME = ['+@LogicalFileName+'],FILEGROWTH = 512MB)'--,MAXSIZE=UNLIMITED)'

PRINT @Query

--EXECUTE(@Query)

FETCH NEXT FROM DBCursor

INTO @DatabaseID,@name,@LogicalFileName,@FileSizeinGB,@GrowthOption

END

CLOSE DBCursor

DEALLOCATE DBCursor

DROP TABLE #ConfigAutoGrowth

GO

--

SELECT

SD.database_id,

SD.name,

SF.name,

CASE SF.status & 0x100000

WHEN 1048576 THEN 'Percentage'

WHEN 0 THEN 'MB'

END AS 'GROWTH Option'

,size*8.0/1024 as SizeinMB

,growth*8.0/1024.0 as Growth

FROM SYS.SYSALTFILES SF

JOIN

SYS.DATABASES SD

ON

SD.database_id = SF.dbid

GO

--

By default the script simply PRINT's out the ALTER DATABASE statements for you to copy-paste to another window and execute, but you can un-comment out the "EXECUTE (@Query)" statement and the script will automatically enact the changes.

Hope this helps!